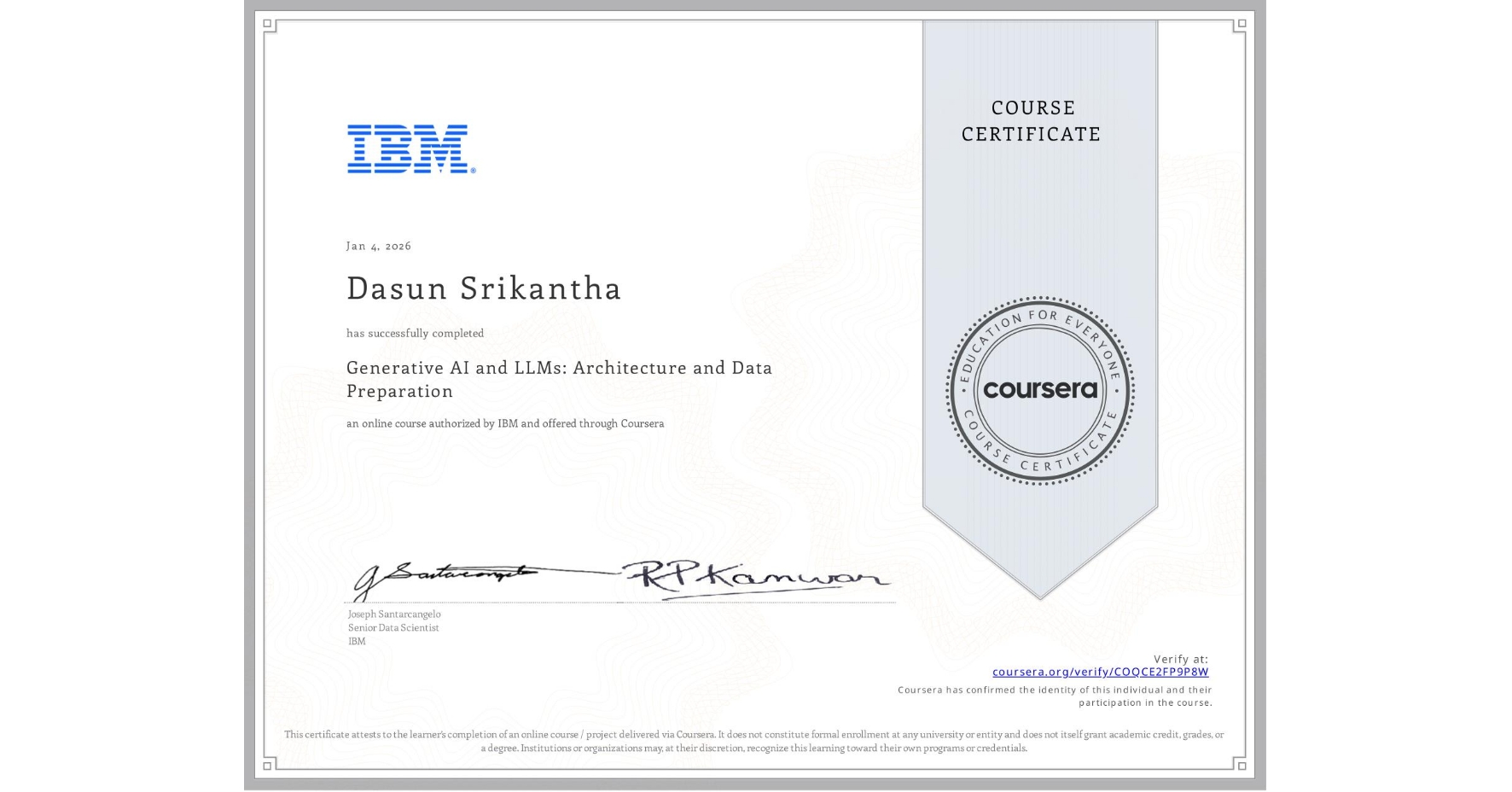

Generative AI and LLMs: Architecture and Data Preparation

Completed by Dasun Srikantha

January 4, 2026

5 hours (approximately)

Dasun Srikantha's account is verified. Coursera certifies their successful completion of Generative AI and LLMs: Architecture and Data Preparation.

What you will learn

Differentiate between generative AI architectures and models, such as RNNs, transformers, VAEs, GANs, and diffusion models

Describe how LLMs, such as GPT, BERT, BART, and T5, are applied in natural language processing tasks

Implement tokenization to preprocess raw text using NLP libraries like NLTK, spaCy, BertTokenizer, and XLNetTokenizer

Create an NLP data loader in PyTorch that handles tokenization, numericalization, and padding for text datasets

Skills you will gain

- Data Preprocessing

- Large Language Modeling

- Artificial Intelligence

- Natural Language Processing

- Hugging Face

- Generative Adversarial Networks (GANs)

- Recurrent Neural Networks (RNNs)

- Generative AI

- Generative Model Architectures

- PyTorch (Machine Learning Library)

- Text Mining

- Data Pipelines