Case Study: Predicting Housing Prices

In our first case study, predicting house prices, you will create models that predict a continuous value (price) from input features (square footage, number of bedrooms and bathrooms, ...). This is just one of the many places where regression can be applied. Other applications range from predicting health outcomes in medicine, stock prices in finance, and power usage in high-performance computing, to analyzing which regulators are important for gene expression.

In this course, you will explore regularized linear regression models for the tasks of prediction and feature selection. You will be able to handle very large sets of features and select between models of varying complexity. You will also analyze the impact of aspects of your data, such as outliers, on your selected models and predictions. To fit these models, you will implement optimization algorithms that scale to large datasets.

Learning objectives: by the end of this course, you will be able to:

- Describe the input and output of a regression model
- Compare and contrast bias and variance when modeling data
- Estimate model parameters using optimization algorithms
- Tune parameters with cross-validation
- Analyze the performance of the model
- Describe the notion of sparsity and how LASSO leads to sparse solutions
- Deploy methods to select between models
- Exploit the model to form predictions

Machine Learning: Regression

This course is part of the Machine Learning Specialization

Instructor: Emily Fox

166,076 already enrolled

(5,581 reviews)

Skills you'll gain

- Model evaluation
- Algorithms
- Python programming
- Supervised learning
- Data preprocessing
- Regression analysis
- Predictive modeling
- Feature engineering
- Statistical modeling
- Machine learning

Details to know

15 assignments

There are 8 modules in this course

Regression is one of the most important and most widely used tools in statistics and machine learning. It lets you make predictions from data by learning the relationship between the features of your data and an observed, continuous-valued response. Regression is used in a large number of applications, ranging from predicting stock prices to understanding gene regulatory networks.

This introduction to the course gives you an overview of the topics we will cover and of the background knowledge and resources we assume you have.

Included: 5 videos, 4 readings

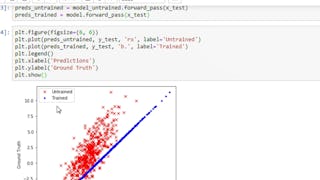

Our course starts with the most basic regression model: fitting a line to data. In this module, we describe the high-level regression task and then specialize these concepts to the case of simple linear regression. You will learn how to formulate a simple regression model and fit it to data using both a closed-form solution and an iterative optimization algorithm called gradient descent. Based on this fitted function, you will interpret the estimated model parameters and form predictions. You will also analyze the sensitivity of your fit to outlying observations.

You will examine all of these concepts in the context of a case study on predicting house prices from the square footage of the house.

Included: 25 videos, 5 readings, 2 assignments
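The two fitting strategies named in the simple linear regression module, a closed-form solution and gradient descent, can be sketched as follows. This is a minimal illustration in plain Python rather than the course's own notebook code: the function names, the step size, the iteration count, and the five data points are all assumptions made up for the example.

```python
def fit_closed_form(x, y):
    """Least-squares intercept and slope for y ~ intercept + slope * x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


def fit_gradient_descent(x, y, step_size=0.05, n_iters=5000):
    """Minimize the mean squared error with fixed-step gradient descent."""
    n = len(x)
    intercept, slope = 0.0, 0.0
    for _ in range(n_iters):
        errors = [intercept + slope * xi - yi for xi, yi in zip(x, y)]
        grad_intercept = 2.0 * sum(errors) / n
        grad_slope = 2.0 * sum(e * xi for e, xi in zip(errors, x)) / n
        intercept -= step_size * grad_intercept
        slope -= step_size * grad_slope
    return intercept, slope


# Made-up data: living area in thousands of square feet, price in $100k.
sqft = [1.0, 1.5, 2.0, 2.5, 3.0]
price = [2.1, 2.9, 4.2, 4.8, 6.0]

closed = fit_closed_form(sqft, price)
iterative = fit_gradient_descent(sqft, price)
print("closed form:     ", closed)
print("gradient descent:", iterative)
```

Both fits should agree to several decimal places on this toy data. The step size is chosen by hand for inputs of this scale; with raw square footage in the thousands, a much smaller step (or rescaled inputs) would be needed for gradient descent to converge.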

The next step in moving beyond simple linear regression is to consider "multiple regression", where multiple features of the data are used to form predictions.

More specifically, in this module you will learn how to build models of more complex relationships between a single variable (e.g., "square feet") and the observed response (such as "house sale price"). This includes fitting a polynomial to your data or capturing seasonal changes in the response value. You will also learn how to incorporate multiple input variables (e.g., "square feet", "number of bedrooms", "number of bathrooms"). You will then be able to describe how all of these models can still be cast within the linear regression framework, but now using multiple "features". Within this multiple regression framework, you will fit models to data, interpret the estimated coefficients, and form predictions.

You will also implement a gradient descent algorithm for fitting a multiple regression model.

Included: 19 videos, 5 readings, 3 assignments
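To illustrate how a polynomial in a single input still fits the linear regression framework described in the multiple regression module, here is a small sketch in plain Python. The helper names (`polynomial_features`, `fit_multiple_regression`), the hyperparameters, and the noiseless toy data are assumptions chosen for the example, not the course's code.

```python
def polynomial_features(x, degree):
    """Map one input to the features [1, x, x^2, ..., x^degree]."""
    return [x ** p for p in range(degree + 1)]


def predict(weights, features):
    """Linear model: weighted sum of the features."""
    return sum(w * h for w, h in zip(weights, features))


def fit_multiple_regression(rows, targets, step_size=0.1, n_iters=2000):
    """Gradient descent on the mean squared error over all weights at once."""
    n = len(rows)
    weights = [0.0] * len(rows[0])
    for _ in range(n_iters):
        errors = [predict(weights, row) - t for row, t in zip(rows, targets)]
        gradient = [
            2.0 * sum(e * row[j] for e, row in zip(errors, rows)) / n
            for j in range(len(weights))
        ]
        weights = [w - step_size * g for w, g in zip(weights, gradient)]
    return weights


# Toy data generated exactly from y = 1 + 2x + 3x^2 (no noise).
inputs = [-1.0, -0.5, 0.0, 0.5, 1.0]
targets = [1.0 + 2.0 * x + 3.0 * x ** 2 for x in inputs]

rows = [polynomial_features(x, 2) for x in inputs]
weights = fit_multiple_regression(rows, targets)
print(weights)  # should be close to the generating coefficients 1, 2, 3
```

The model is nonlinear in `x` but linear in the weights, which is why the same gradient descent update works unchanged. Replacing `polynomial_features` with a function that returns several distinct inputs (for example area, bedrooms, and bathrooms) gives ordinary multiple regression.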

Having studied linear regression models and the algorithms for estimating their parameters, you are now ready to assess how well your chosen method should perform when predicting new data. You are also ready to select, among possible models, the one that performs best.

This module covers these important topics of model selection and assessment. You will examine both theoretical and practical aspects of such analyses. You will first explore the concept of measuring the "loss" of your predictions, and use it to define training, test, and generalization error. For these error measures, you will analyze how they vary with model complexity and how they can be used to form a valid assessment of predictive performance. This leads directly to an important discussion of the bias-variance tradeoff, which is fundamental to machine learning. Finally, you will devise a method to first select among models and then assess the performance of the selected model.

The concepts described in this module are key to all machine learning problems, well beyond the regression setting addressed in this course.

Included: 14 videos, 2 readings, 2 assignments
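The select-then-assess procedure from the model selection module can be sketched with three models of increasing flexibility. Everything here is an illustrative assumption: the three model families (a constant, a line, and a deliberately over-flexible "memorizer" that returns the target of the closest training input), the squared-error loss, and the hand-made training, validation, and test sets.

```python
def mean_squared_error(model, xs, ys):
    """Average squared-error loss of a model on a dataset."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def fit_constant(xs, ys):
    mean_y = sum(ys) / len(ys)
    return lambda x: mean_y


def fit_line(xs, ys):
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return lambda x: intercept + slope * x


def fit_memorizer(xs, ys):
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda p: abs(p[0] - x))[1]


# Made-up data: y = 2x, with alternating +/-0.5 noise on the training set only.
train_x = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
train_y = [0.5, 1.5, 4.5, 5.5, 8.5, 9.5]
valid_x, valid_y = [0.4, 2.4, 4.4], [0.8, 4.8, 8.8]
test_x, test_y = [1.6, 3.6], [3.2, 7.2]

fitters = {"constant": fit_constant, "line": fit_line, "memorizer": fit_memorizer}
models = {name: fit(train_x, train_y) for name, fit in fitters.items()}

train_error = {n: mean_squared_error(m, train_x, train_y) for n, m in models.items()}
valid_error = {n: mean_squared_error(m, valid_x, valid_y) for n, m in models.items()}

# Select on validation error, then assess the chosen model once on the test set.
best = min(valid_error, key=valid_error.get)
test_error = mean_squared_error(models[best], test_x, test_y)
print(best, train_error, valid_error, test_error)
```

Training error keeps falling as the model becomes more flexible (the memorizer reaches zero), while validation error is lowest for the line. The test set is touched only after the choice is made, so its error is not biased by the selection step.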

You have examined how the performance of a model varies with its complexity, and you can describe the potential pitfall of complex models becoming overfit to the training data. In this module, you will explore a very simple, but extremely effective, technique for automatically coping with this issue. This method is called "ridge regression". You start with a complex model, but fit it in a manner that incorporates not only a measure of fit to the training data, but also a term that biases the solution away from overfitted functions. To this end, you will explore the symptoms of overfitted functions and use them to define a quantitative measure to include in your revised optimization objective. You will derive both a closed-form solution and a gradient descent algorithm for fitting the ridge regression objective; these are small modifications of the original algorithms you derived for multiple regression. To select the strength of the bias away from overfitting, you will explore a general-purpose method called "cross-validation".

You will implement both cross-validation and gradient descent to fit a ridge regression model and select the regularization constant.

Included: 16 videos, 5 readings, 3 assignments
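As a rough sketch of the ridge regression module's workflow, the following plain-Python example fits a ridge objective by gradient descent and picks the regularization constant by k-fold cross-validation. The objective used here (mean squared error plus `l2_penalty` times the sum of squared non-intercept weights), the interleaved fold assignment, the candidate penalties, and the data are all assumptions for illustration; the course may scale or define the objective differently.

```python
def features(x):
    """Quadratic feature map; the first feature is the intercept."""
    return [1.0, x, x ** 2]


def predict(weights, x):
    return sum(w * h for w, h in zip(weights, features(x)))


def fit_ridge(xs, ys, l2_penalty, step_size=0.1, n_iters=2000):
    """Gradient descent on MSE + l2_penalty * sum(w_j^2 for j >= 1)."""
    rows = [features(x) for x in xs]
    n = len(rows)
    weights = [0.0] * len(rows[0])
    for _ in range(n_iters):
        errors = [
            sum(w * h for w, h in zip(weights, row)) - y
            for row, y in zip(rows, ys)
        ]
        updated = []
        for j, w in enumerate(weights):
            grad = 2.0 * sum(e * row[j] for e, row in zip(errors, rows)) / n
            if j > 0:  # assumption: the intercept is left unpenalized
                grad += 2.0 * l2_penalty * w
            updated.append(w - step_size * grad)
        weights = updated
    return weights


def cross_validation_error(xs, ys, l2_penalty, k=3):
    """Average held-out squared error over k interleaved folds."""
    total = 0.0
    for fold in range(k):
        train = [(x, y) for i, (x, y) in enumerate(zip(xs, ys)) if i % k != fold]
        held_out = [(x, y) for i, (x, y) in enumerate(zip(xs, ys)) if i % k == fold]
        weights = fit_ridge([x for x, _ in train], [y for _, y in train], l2_penalty)
        total += sum((predict(weights, x) - y) ** 2 for x, y in held_out)
    return total / len(xs)


# Made-up data: roughly y = 1 + 2x with small deterministic perturbations.
inputs = [-1.0, -0.75, -0.5, -0.25, 0.0, 0.25, 0.5, 0.75, 1.0]
targets = [-1.1, -0.4, 0.1, 0.4, 1.0, 1.6, 1.9, 2.6, 2.9]

candidates = [0.0, 0.01, 0.1, 1.0]
cv_errors = {p: cross_validation_error(inputs, targets, p) for p in candidates}
best_penalty = min(cv_errors, key=cv_errors.get)
final_weights = fit_ridge(inputs, targets, best_penalty)
print(best_penalty, final_weights)
```

The only change from the unregularized gradient step is the extra `2 * l2_penalty * w` term, which pulls each penalized weight toward zero. After choosing the penalty, the model is refit on all of the data.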

A fundamental machine learning task is to select, among a set of features, those to include in a model. In this module, you will explore this idea in the context of multiple regression, and describe how such feature selection is important both for interpretability and for the efficiency of forming predictions.

To start, you will examine methods that search over an enumeration of models containing different subsets of features. You will analyze both exhaustive search and greedy algorithms. Then, instead of an explicit enumeration, we turn to lasso regression, which implicitly performs feature selection in a manner akin to ridge regression: a complex model is fit based on a measure of fit to the training data plus a measure of overfitting different from that used in ridge. The lasso method has had an impact in numerous applied domains, and the ideas behind it have fundamentally changed machine learning and statistics. You will also implement a coordinate descent algorithm for fitting a lasso model.

Coordinate descent is another general optimization technique, useful in many areas of machine learning.

Included: 22 videos, 4 readings, 3 assignments
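The coordinate descent algorithm mentioned in the lasso module can be sketched as below. This is a simplified illustration under stated assumptions: the objective is taken to be the residual sum of squares plus `l1_penalty` times the sum of absolute weights, there is no intercept, every feature is penalized, and the four-row dataset (one strong feature, one nearly irrelevant feature) is invented for the example.

```python
def soft_threshold(rho, threshold):
    """Shrink rho toward zero by threshold; return exactly 0.0 inside the band."""
    if rho > threshold:
        return rho - threshold
    if rho < -threshold:
        return rho + threshold
    return 0.0


def lasso_coordinate_descent(rows, targets, l1_penalty, n_sweeps=100, tolerance=1e-10):
    """Minimize RSS(w) + l1_penalty * sum(|w_j|) one coordinate at a time."""
    n_features = len(rows[0])
    weights = [0.0] * n_features
    for _ in range(n_sweeps):
        largest_change = 0.0
        for j in range(n_features):
            rho = 0.0  # correlation of feature j with the residual that ignores feature j
            z = 0.0    # squared norm of feature j (assumed nonzero)
            for row, y in zip(rows, targets):
                prediction_without_j = sum(
                    w * h for k, (w, h) in enumerate(zip(weights, row)) if k != j
                )
                rho += row[j] * (y - prediction_without_j)
                z += row[j] ** 2
            new_weight = soft_threshold(rho, l1_penalty / 2.0) / z
            largest_change = max(largest_change, abs(new_weight - weights[j]))
            weights[j] = new_weight
        if largest_change < tolerance:
            break
    return weights


# Made-up data: targets are 3 * feature_0 + 0.1 * feature_1.
rows = [[1.0, 1.0], [1.0, -1.0], [-1.0, 1.0], [-1.0, -1.0]]
targets = [3.1, 2.9, -2.9, -3.1]

least_squares = lasso_coordinate_descent(rows, targets, l1_penalty=0.0)
sparse = lasso_coordinate_descent(rows, targets, l1_penalty=2.0)
print(least_squares)  # both weights nonzero
print(sparse)         # the weak feature's weight is driven exactly to zero
```

With the penalty set to zero the update reduces to ordinary least squares, coordinate by coordinate. With a positive penalty, the soft-thresholding step sets the weakly correlated feature's weight to exactly zero while only shrinking the strong one, which is the sparsity behavior that distinguishes lasso from ridge.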

Up to this point, we have focused on methods that fit parametric functions (like polynomials and hyperplanes) to the entire dataset. In this module, we instead turn our attention to a class of "nonparametric" methods. These methods allow the complexity of the model to increase as more data are observed, and result in fits that adapt locally to the observations.

We start by considering a simple and intuitive example of nonparametric methods, nearest neighbor regression: the prediction for a query point is based on the outputs of the most closely related observations in the training set. This approach is extremely simple, but can provide excellent predictions, especially for large datasets. You will deploy algorithms to search for the nearest neighbors and form predictions based on the discovered neighbors. Building on this idea, we turn to kernel regression. Instead of forming predictions based on a small set of neighboring observations, kernel regression uses all observations in the dataset, but the impact of each observation on the predicted value is weighted by its similarity to the query point. You will analyze the theoretical performance of these methods in the limit of infinite training data, and explore the scenarios in which these methods work well or struggle. You will also implement these techniques and observe their practical behavior.

Included: 13 videos, 2 readings, 2 assignments
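The two nonparametric predictors from this module can be sketched in a few lines of plain Python. The function names, the one-dimensional absolute-distance metric, the Gaussian kernel, and the toy data are assumptions for illustration; other distances and kernels are equally valid choices.

```python
import math


def knn_predict(train_x, train_y, query, k):
    """Average the targets of the k training inputs closest to the query."""
    by_distance = sorted(zip(train_x, train_y), key=lambda pair: abs(pair[0] - query))
    nearest = by_distance[:k]
    return sum(y for _, y in nearest) / k


def kernel_predict(train_x, train_y, query, bandwidth):
    """Weighted average of all targets, weighted by a Gaussian kernel on distance."""
    weights = [
        math.exp(-((x - query) ** 2) / (2.0 * bandwidth ** 2)) for x in train_x
    ]
    return sum(w * y for w, y in zip(weights, train_y)) / sum(weights)


# Made-up one-dimensional training set.
train_x = [1.0, 2.0, 3.0, 4.0, 5.0]
train_y = [1.0, 4.0, 9.0, 16.0, 25.0]

two_nn = knn_predict(train_x, train_y, query=2.2, k=2)
narrow = kernel_predict(train_x, train_y, query=2.0, bandwidth=0.1)
wide = kernel_predict(train_x, train_y, query=2.0, bandwidth=100.0)
print(two_nn, narrow, wide)
```

With `k=2` the query at 2.2 averages the targets at inputs 2 and 3. The bandwidth plays a similar role for kernel regression: a very narrow kernel reproduces the nearest observation almost exactly, while a very wide kernel approaches the global mean of the targets.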

In the conclusion of the course, we will recap what we have covered. This includes both techniques specific to regression and fundamental machine learning concepts that will appear throughout the specialization. We also briefly discuss some important regression techniques we did not cover in this course.

We conclude with an overview of what is in store for you in the rest of the specialization.

Included: 5 videos, 1 reading

Learner reviews

5,581 reviews

- 5 stars: 80.92%
- 4 stars: 15.89%
- 3 stars: 1.88%
- 2 stars: 0.46%
- 1 star: 0.84%

Showing 3 of 5,581

Reviewed on May 4, 2020

Excellent professor. Fundamentals and math are provided as well. Very good notebooks for the assignments...it’s just that turicreate library that caused some issues, however the course deserves a 5/5

Reviewed on August 30, 2016

it's a nice course. I have learnt many new concepts. I am from information systems background and want my career towards data science. This course helped me a lot in learning new concepts.

Reviewed on June 11, 2016

This course start from problems. So this great to motivate the content and let student know why. However, there are lot of confusion questions that lead to miss understand the exercise problems.

Frequently asked questions

To access the course materials and assignments and to earn a certificate, you need to purchase the Certificate experience when you enroll in a course. You can try a free trial or apply for financial aid instead. The course may offer a "Full course, no certificate" option. This option lets you see all course materials, submit the required assessments, and get a final grade. It also means that you will not be able to purchase a Certificate experience.

When you enroll in the course, you get access to all of the courses in the specialization, and you earn a certificate when you complete the work. Your electronic certificate will be added to your Accomplishments page; from there, you can print your certificate or add it to your LinkedIn profile.

Yes, for some learning programs you can apply for financial aid or a scholarship if you cannot afford the enrollment fee. If financial aid or a scholarship is available for your learning program, you will find a link to apply on the description page.
